Does AI actually help students learn? A recent experiment in a high school provides a cautionary tale.

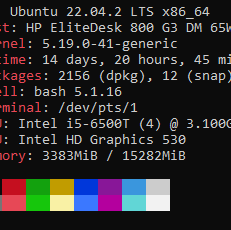

Researchers at the University of Pennsylvania found that Turkish high school students who had access to ChatGPT while doing practice math problems did worse on a math test compared with students who didn’t have access to ChatGPT. Those with ChatGPT solved 48 percent more of the practice problems correctly, but they ultimately scored 17 percent worse on a test of the topic that the students were learning.

A third group of students had access to a revised version of ChatGPT that functioned more like a tutor. This chatbot was programmed to provide hints without directly divulging the answer. The students who used it did spectacularly better on the practice problems, solving 127 percent more of them correctly compared with students who did their practice work without any high-tech aids. But on a test afterwards, these AI-tutored students did no better: students who just did their practice problems the old-fashioned way, on their own, matched their test scores.

Traditional instruction gave the same result as a bleeding edge ChatGPT tutorial bot. Imagine what would happen if a tiny fraction of the billions spent to develop this technology went into funding improved traditional instruction.

Better-paid teachers, better resources, studies geared toward optimizing traditional instruction, etc.

“Move fast and break things” was always a stupid goal. Turbocharging it with all this money is killing the tried-and-true options that actually produce results, while straining the power grid and worsening global warming.

Investing in actual education infrastructure won’t get VC techbros their yachts, though.

It’s the other way round: education makes for less gullible people and for workers who demand more rights more freely and easily, and then those workers come for their yachts…

Imagine if all the money spent on war were invested in education 🫣 What a beautiful world we would live in.

And cracking open a book didn’t demolish the environment. Weird.

Traditional instruction gave the same result as a bleeding edge ChatGPT tutorial bot.

Interesting way of looking at it. I disagree with your conclusion about the study, though.

It seems like the AI tool would be helpful for things like assignments rather than tests. I think it’s intellectually dishonest to ignore the gains in some environments because it doesn’t have gains in others.

You’re also comparing a young technology to methods that have been adapted over hundreds of thousands of years. Was the first automobile entirely superior to every horse?

I get that some people just hate AI because it’s AI. For the people interested in nuance, I think this study is interesting. I think other studies will seek to build on it.

The point of assignments is to help study for your test.

Homework is forced study. If you’re just handed the answers, you will do shit on the test.

The point of assignments is to help study for your test.

To me, an “assignment” is more of a project. Not rote practice, but applying knowledge to a somewhat longer-term, multi-part project.

The education system is primarily about controlling bodies and minds. So any actual education is counter-productive.

LLMs/GPT, and other forms of the AI boogeyman, are all just a tool we can use to augment education when it makes sense. Just like the introduction of calculators or the internet, AI isn’t going to be the easy button, nor is it going to steal all teachers’ jobs. These tools need to be studied, trained for, and applied purposely in order to be most effective.

EDIT: Downvoters, I’d appreciate some engagement on why you disagree.

are all just a tool

just a tool

it’s just a tool

a tool is a tool

all are just tools

it’s no more than a tool

it’s just a tool

it’s a tool we can use

one of our many tools

it’s only a tool

these are just tools

a tool for thee, a tool for me

guns don’t kill people, people kill people

the solution is simple:

teach drunk people not to shoot their guns so much

unless they want to

that is the American way

tanks don’t kill people, people kill people

the solution is simple:

teach drunk people not to shoot their tanks so much

the barista who offered them soy milk

wasn’t implying anything about their T levels

that is the American way

Thanks for reminding me that AI is just tools, friend.

My memory is not so good.

I often can’t

remember

Ok, I’m going to reply like you’re being serious. It is a tool and it’s out there and it’s not going anywhere. Do we allow ourselves to imagine how it can be improved to help students or do we ignore it and act like it won’t ever be something students need to learn?

It is a tool

Yeah, I agree. I wrote twelve lines about that.

I don’t even know if this is ChatGPT’s fault. This would be the same outcome if someone just gave them the answers to a study packet. Yes, they’ll have the answers because someone (or something) gave them the answers, but they won’t know how to get those answers unless someone teaches them. Surprise: for kids to learn, they need to be taught. Shocker.

I’ve found chatGPT to be a great learning aid. You just don’t use it to jump straight to the answers, you use it to explore the gaps and edges of what you know or understand. Add context and details, not final answers.

The study shows that once you remove the LLM though, the benefit disappears. If you rely on an LLM to help break things down or add context and details, you don’t learn those skills on your own.

I used it to learn some coding, but without using it again, I couldn’t replicate my own code. It’s a struggle, but I don’t think using it as a teaching aid is a good idea yet, maybe ever.

There are lots of studies out there, and many of them contradict each other. A study with references contributes to the discussion, but it isn’t the absolute truth.

I wouldn’t say this matches my experience. I’ve used LLMs to improve my understanding of a topic I’m already skilled in, when I’m just looking to understand something nuanced. Being able to interrogate it on a very specific question whose answer I can appreciate is really useful and definitely sticks with me beyond the chat.


Kids who take shortcuts and don’t learn suck at recalling knowledge they never had…

The only reason we’re trying to somehow compromise and allow or even incorporate cheating software into student education is because the tech-bros and singularity cultists have been hyping this technology like it’s the new, unstoppable force of nature that is going to wash over all things and bring about the new Golden Age of humanity as none of us have to work ever again.

Meanwhile, 80% of AI startups sink and something like 75% of the “new techs” like AI drive-thru orders and AI phone support go to call centers in India and the Philippines. The only thing we seem to have gotten is the absolute rotting destruction of all content on the internet and children growing up thinking it’s normal to consume this watered-down, plagiarized, worthless content.


I took German in high school and cheated by inventing my own runic script. I would draw elaborate fantasy/sci-fi drawings on the covers of my notebooks with the German verb conjugations and whatnot written all over monoliths or knights’ armor or dueling spaceships, using my own script instead of regular characters, and then have these notebooks sitting on my desk while taking the tests. I got 100% on every test and now the only German I can speak is the bullshit I remember Nightcrawler from the X-Men saying. Unglaublich! (Unbelievable!)

Meanwhile the teacher was thinking, “interesting tactic you’ve got there, admiring your art in the middle of a test”

God knows what he would have done to me if he’d caught me. He once threw an eraser at my head for speaking German with a Texas accent. In his defense, he grew up in a post-war Yugoslavian concentration camp.

Must be the same era; my elderly, off-the-boat Italian teacher in ’90s Brooklyn used to hit me with his cane.

I just wrote really small on a paper in my glasses case, or hid data in the depths of my TI-86.

We love Nightcrawler in this house.

Good tl;dr

Actually, if you read the article: ChatGPT is horrible at math. A modified version, where ChatGPT was fed the correct answers along with the problems, didn’t make the kids stupider, but it didn’t make them any better either, because they mostly just asked it for the answers.

At work we gave a 16/17-year-old work experience over the summer. He was using ChatGPT without understanding the code it was outputting.

In his last week he asked why he was writing print statements like this:

`print(f"message {thing} ")`

Sounds like operator error, because he could have asked ChatGPT and gotten the correct answer about Python f-strings…

Students first need to learn to:

- Break down the line of code, then

- Ask the right questions

The student in question probably didn’t develop the mental faculties required to think, “Hmm… what the ‘f’?”

A similar thing happened to me when I had to teach a BTech grad with 2 years of prior experience. At first, I found it hard to believe that someone couldn’t ask such questions of themselves, by themselves. I am repeatedly dumbfounded at how someone manages to be so ignorant of what they are typing, and I have recently realised (after interacting with multiple such people) that this is actually the norm[1].

[1] …and that I am the weirdo for trying hard to visualise the C++ abstract machine in my mind.

No. Writing print statements, reading console input and building little games like tic-tac-toe and crosswords aren’t the right way to learn computer science. That is how things are currently done, but you learn much more through open source code and trying to build useful things yourself. I would never go back to doing those little chores to get a grade.

I would never go back to doing those little chores to get a grade.

So either you have finished obtaining all the academic certifications that require said chores, or you are going to fail at getting a grade.

It all depends on how and what you ask it, plus an element of randomness. Remember that it’s essentially a massive text predictor. The same question asked in different ways can lead it into predicting text based on different conversations it trained on. There are tons of people talking about Python; some know it well, others not as well. And the LLM can end up giving some kind of hybrid of multiple other answers.

It doesn’t understand anything, it’s just built a massive network of correlations such that if you type “Python”, it will “want” to “talk” about scripting or snakes (just tried it, it preferred the scripting language, even when I said “snake”, it asked me if I wanted help implementing the snake game in Python 😂).
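The “massive text predictor” idea can be illustrated with a toy. This is a minimal sketch, assuming a word-level bigram model: it only counts which word follows which in a made-up corpus and samples the next word in proportion to those counts. Real LLMs use neural networks over subword tokens and far more context, so this is an analogy, not how ChatGPT works; `train_bigrams`, `predict_next` and the corpus are hypothetical names chosen for illustration.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """For every word, count how often each other word directly follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str, rng: random.Random) -> str | None:
    """Sample a next word with probability proportional to how often it followed `word`."""
    options = follows.get(word.lower())
    if not options:
        return None  # the word never appeared with a successor in the corpus
    candidates = list(options)
    weights = [options[c] for c in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Made-up toy corpus: "python" is followed by "scripting" twice and "snake" once.
corpus = "python scripting is fun . python scripting is easy . python snake bites ."
model = train_bigrams(corpus)
print(model["python"])  # Counter({'scripting': 2, 'snake': 1})

# Same prompt, different random draws: usually "scripting", sometimes "snake".
rng = random.Random()
print([predict_next(model, "python", rng) for _ in range(5)])
```

The model has no notion of what Python or a snake is; it only reproduces the correlations in its training text, and the sampling step is why the same question can get different answers on different runs.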

So it is very possible for it to give accurate responses sometimes and wildly different responses at other times. Like with the question about African countries that start with “K”: I’ve seen reasonable responses and meme ones. It has even said there are none while also acknowledging Kenya in the same response.

I’m afraid to ask, but what’s wrong with that line? In the right context that’s fine to do, no?

There is nothing wrong with it. He just didn’t know what it meant after using it for a little over a month.
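For anyone else wondering what that line does, here is a minimal sketch. The value of `thing` is a hypothetical stand-in, since the original snippet doesn’t show what was being printed.

```python
thing = 42  # hypothetical value; in the original code `thing` was whatever was being printed

# An f-string (formatted string literal, Python 3.6+) evaluates each
# expression inside {} and inserts its formatted value into the string.
message = f"message {thing} "
print(message)  # prints: message 42   (with a trailing space)

# The older str.format() spelling builds the same string.
assert message == "message {} ".format(thing)

# Expressions and format specs also work inside the braces.
print(f"{thing * 2} items, pi is about {3.14159:.2f}")  # prints: 84 items, pi is about 3.14
```

So the line is ordinary, idiomatic Python; the only thing “wrong” with it was not knowing what the `f` prefix does.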

no shit

“tests designed for people who don’t use ChatGPT are performed better by people who don’t”

This is the same fn calculator argument we had 20 years ago.

A tool is a tool. It will come in handy, and if it’s going to be there in real life anyway, then it’s a dumb test.

The point of learning isn’t just access to that information later. That basic understanding gets built on all the way up through the end of your education, and is the base to all sorts of real world application.

There’s no overlap at all between people who can’t pass a test without an LLM and people who understand the material.


We learned that calculators hinder learning. Arithmetic is a core competency you can’t do algebra without, let alone higher math.

I really have no idea why you’re asserting the opposite so confidently. Calculators are not beneficial.


Also actual mathematicians are pretty much universally capable of doing many calculations to reasonable precision in their head, because internalizing the relationships between numbers and various mathematical constructs is necessary to be able to reason about them and use them in more than trivial ways.

Tests for recall don’t exist because the specific piece of information is the point. They exist because being able to retrieve the information is essential to integrate it into scenarios where you can use it, just like being able to do math without a calculator is needed to actually apply math in ways that aren’t ~~proscribed~~ prescribed for you.

I mean, you’re right, but also, anybody who is an actual mathematician has no idea how to add 6+17; they’re mostly concerned only with “why” 6+17 is what it is, and the answer is something along the lines of bijective function space.

Source: what did I do to deserve this

Bertrand Russell (with Alfred North Whitehead) tried to logically prove that 1 + 1 = 2. You can check it in Principia Mathematica.
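For contrast, a modern proof assistant checks facts like this mechanically. A minimal sketch in Lean 4 (core library only, no Mathlib); this is not Russell’s logicist construction, only an illustration of why such sums are provable statements rather than axioms: natural numbers are built from `0` and `Nat.succ`, addition is defined by recursion, and both sides reduce to the same value, so reflexivity (`rfl`) closes each goal.

```lean
-- `Nat` is built from `0` and `Nat.succ`; `Nat.add` recurses on its second argument.
-- Both sides of each equation compute to the same numeral, so `rfl` proves it.
example : 1 + 1 = 2 := rfl
example : 2 + 2 = 4 := rfl

-- The same fact with the successors spelled out explicitly.
example : Nat.succ (Nat.succ 0) + Nat.succ (Nat.succ 0)
    = Nat.succ (Nat.succ (Nat.succ (Nat.succ 0))) := rfl
```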

proscribed

err… I’m finding it hard to understand the meaning of the sentence using the dictionary meaning of this word. Did you mean to use some other word?

I’d love to tell you how the hell I got there. My brain exploded I guess. I meant prescribed, in the sense that you’re following the exact script someone laid out before you.

I had a physics class in college where we spent each section working through problems to demonstrate the concepts. You were allowed a page “cheat sheet” to use on the exams, and the exams were pretty much the same problems with the numbers changed. Lots of people got As in that class. Not many learned basic physics.

A lot of people don’t get further than that in math, because they don’t understand the basic building blocks. Plugging numbers into a formula isn’t worthless, and a calculator helps that. But it doesn’t help you once the problem changes a little instead of just the inputs.

You were allowed a page “cheat sheet” to use on the exams, and the exams were pretty much the same problems with the numbers changed.

That seems like the worst way of making an exam.

If the cheat sheet weren’t there, it would at least be testing something (i.e. how many formulae you memorised), albeit something useless.

When you let students have a cheat sheet, it is supposed to be obvious that it will be a HOTS (higher-order thinking skills) test. Well, maybe for teachers lacking said HOTS, it was not obvious.

Yeah, I’m all for “you don’t have to memorize every formula”, but I would have just provided a generic formula sheet and made people at least get from there to the solutions, even if you did the same basic problems.

It’s hard for me to objectively comment on the difficulty of the material because I’d already had most of the material in high school physics and it was pretty much just basic algebra to get from any of the formulas provided to the solution, but the people following the sheets took the full hour to do the exams that took me 5 minutes without the silly cheat sheet, because they didn’t learn anything in the class.

(Edit: the wild part is that a sizable number of people in the class actually studied, like multiple hours, for that test with the exact same problems we had in class with numbers changed, while also bringing the cheat sheet where they had the full step by step solutions in for the test.)

Fun to see how this thread stemmed from “no shit”.

I didn’t mean it that way, I really just meant the discussion is idiotic

As someone who has taught math to students in a classroom, unless you have at least a basic understanding of HOW the numbers are supposed to work, the tool - a calculator - is useless. While getting the correct answer is important, I was more concerned with HOW you got that answer. Because if you know how you got that answer, then your ability to get the correct answer skyrockets.

Because doing it your way leads to blindly relying on AI and believing those answers are always right. Because it’s just a tool right?

Nowhere did I say a kid shouldn’t learn how to do it. I said it’s a tool; I’m saying it’s a dumb argument/discussion.

If I said students who only ever used a calculator didn’t do as well on a test where calculators weren’t allowed, you would say “yeah, no shit.”

This is just an anti-technology, anti-new-generation separation piece that divides people and will ultimately create rifts that help us ignore real problems.

The main goal of learning is learning how to learn, or learning how to figure new things out. If “a tool can do it better, so there is no point in not allowing it” was the metric, we would be doing a disservice because no one would understand why things work the way they do, and thus be less equipped to further our knowledge.

This is why I think Common Core, at least for math, is such a good thing: it teaches you methods that help you intuitively figure out how to get to the answer, rather than some mindless set of steps that gets you there.

IKR?

Cheaters who cheat rather than learn don’t learn. More on this shocking development at 11.

Using ChatGPT as a study aid is cheating how?

Because a huge part about learning is actually figuring out how to extract/summarise information from imperfect sources to solve related problems.

If you use ChatGPT as a crutch because you’re too lazy to read between the lines and infer meaning from text, then you’re not exercising that particular skill.

I don’t disagree, but that’s like saying using a calculator will hurt your understanding of higher-order math. It’s a tool, not a crutch. I’ve used it many times to help me understand concepts just out of reach. I don’t implicitly trust anything an LLM says, but it can and does help me.

Congrats but there’s a reason teachers ban calculators… And it’s not always for the pain.

it’s not always for the pain

Use the rod, beat the child!

In some cases I’d argue, as an engineer, that having no calculator makes students better at advanced math and problem solving. It forces you to work with the variables and understand how to do the derivation. You learn a lot more manipulating the ideal gas formula as variables and then plugging in numbers at the end, versus adding numbers to start with. You start to implicitly understand the direct and inverse relationships with variables.
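The ideal-gas point can be shown as a short worked example. Assuming a fixed amount of gas n, PV = nRT gives the combined relation below; solving for the unknown symbolically first makes the relationships visible (the final volume falls as the final pressure rises and grows with the final temperature) before any numbers go in. The numbers in the last step are made up for illustration.

$$\frac{P_1 V_1}{T_1} = nR = \frac{P_2 V_2}{T_2} \quad\Longrightarrow\quad V_2 = V_1 \cdot \frac{P_1}{P_2} \cdot \frac{T_2}{T_1}$$

$$V_2 = 2.0\,\text{L} \cdot \frac{100\,\text{kPa}}{200\,\text{kPa}} \cdot \frac{450\,\text{K}}{300\,\text{K}} = 2.0\,\text{L} \cdot 0.5 \cdot 1.5 = 1.5\,\text{L}$$

Plugging numbers in at the start would have hidden the inverse dependence on pressure and the direct dependence on temperature inside a single arithmetic result.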

Plus, learning to directly use variables is very helpful for coding. And it makes problem solving much more of a focus. I once didn’t have enough time left in an exam to come to a final numerical answer, so I instead wrote out exactly what steps I would take to get the answer – which included doing some graphical solutions on a graphing calculator. I wrote how to use all the results, and I ended up with full credit for the question.

To me, that is the ultimate goal of math and problem solving education. The student should be able to describe how to solve the problem even without the tools to find the exact answer.

Yes.

Take a college physics test without a calculator if you wanna talk about pain. And I doubt you could find a single person who could calculate trig functions or logarithms longhand. At some point you move past the point of proving you can do arithmetic. It’s just not necessary.

The really interesting question here is whether an LLM is useful as a study aid. It looks like more research is necessary. But an LLM is not smart. It’s a complicated next-word predictor, and LLMs have been known to go off the rails for sure. And this article suggests it’s not as useful as you might think for new learners.

Chem is a long-forgotten memory, but trig… doing it by hand is a matter of precision. Very far from impossible… I’m pretty sure you learn about precision before trig, maybe in Algebra I or II. E.g. can you accept pi as 3.14? Or do you need 3.14xxxxxxxxxxxxxxxxxxxxxxxxxx?
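The “matter of precision” point can be made concrete. A minimal sketch, assuming the Maclaurin series sin x = x - x³/3! + x⁵/5! - …, which needs nothing beyond multiplication, division and addition; Python stands in for pencil and paper here, and `sin_taylor` is a hypothetical helper name. Each extra term buys more correct digits, so the real question is how many terms you are willing to grind through.

```python
import math


def sin_taylor(x: float, terms: int) -> float:
    """Approximate sin(x) by summing the first `terms` terms of its Maclaurin series:
    x - x**3/3! + x**5/5! - x**7/7! + ...
    """
    total = 0.0
    term = x  # k = 0 term: x**1 / 1!
    for k in range(terms):
        total += term
        # Each term is the previous one times -x**2 / ((2k+2)(2k+3)),
        # so no factorials or powers have to be recomputed from scratch.
        term *= -x * x / ((2 * k + 2) * (2 * k + 3))
    return total


if __name__ == "__main__":
    x = math.pi / 6  # 30 degrees, where sin is exactly 0.5
    for n in range(1, 6):
        approx = sin_taylor(x, n)
        print(f"{n} term(s): {approx:.12f}   error {abs(approx - 0.5):.1e}")
```

For small angles a handful of terms is plenty; for larger angles you would first reduce the angle (for example with sin(π - x) = sin x), which keeps the number of terms small.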

Trig is just rad with pi.

There are many reasons for why some teachers do some things.

We should not forget that one of them is “because they’re useless cunts who have no idea what they’re doing and they’re just powertripping their way through some kids’ education until the next paycheck”.

Not knowing how to add 6 + 8 just because a calculator is always available isn’t okay.

I have friends in my DnD session who have to count the numbers together on their fingers. I feel bad for them. Don’t blame a teacher for banning calculators because they want you to be a smarter, more efficient and productive person.

ChatGPT’s hallucinations inspire me to search for real references. They teach us that we cannot blindly trust what we are told. Teachers, by contrast, commonly insist that they are correct.

I do honestly have a tendency to more thoroughly verify anything AI tells me.

Yea, this highlights a fundamental tension I think: sometimes, perhaps oftentimes, the point of doing something is the doing itself, not the result.

Tech is hyper focused on removing the “doing” and reproducing the result. Now that it’s trying to put itself into the “thinking” part of human work, this tension is making itself unavoidable.

I think we can all take it as a given that we don’t want to hand total control to machines, simply because of accountability issues. Which means we want a human “in the loop” to ensure things stay sensible. But the ability of that human to keep things sensible requires skills, experience and insight. And all of the focus our education system now has on grades and certificates has led us astray into thinking that the practice and experience doesn’t mean that much. In a way the labour market and employers are relevant here in their insistence on experience (to the point of absurdity sometimes).

Bottom line is that we humans are doing machines, and we learn through practice and experience, in ways I suspect much closer to building intuitions. Being stuck on a problem, being confused and getting things wrong are all part of this experience. Making it easier to get the right answer is not making education better. LLMs likely have no good role to play in education and I wouldn’t be surprised if banning them outright in what may become a harshly fought battle isn’t too far away.

All that being said, I also think LLMs raise questions about what it is we’re doing with our education and tests and whether the simple response to their existence is to conclude that anything an LLM can easily do well isn’t worth assessing. Of course, as I’ve said above, that’s likely manifestly rubbish … building up an intelligent and capable human likely requires getting them to do things an LLM could easily do. But the question still stands I think about whether we need to also find a way to focus more on the less mechanical parts of human intelligence and education.

LLMs likely have no good role to play in education and I wouldn’t be surprised if banning them outright in what may become a harshly fought battle isn’t too far away.

While I agree that LLMs have no place in education, unfortunately you’re not going to be able to do more than just ban them in class. Students will be able to use them at home, and the alleged “LLM detection” applications are no better than throwing a dart at the wall. You may catch a couple of students, but you’re going to falsely accuse many more. The only surefire way to catch them is if they’re stupid and don’t bother to edit what they turn in.
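The “catch a couple, falsely accuse many more” point is a base-rate effect. A minimal sketch with made-up numbers: a class of 100 with 10 cheaters, and a “dart-throwing” detector that flags 20% of essays no matter who wrote them. `expected_flags` is a hypothetical helper, not the API of any real detection tool.

```python
def expected_flags(n_cheaters: int, n_honest: int,
                   true_positive_rate: float, false_positive_rate: float):
    """Expected outcome of running a detector over a class.

    Returns (cheaters caught, honest students falsely accused, precision),
    where precision is the fraction of flagged students who actually cheated.
    """
    caught = n_cheaters * true_positive_rate
    falsely_accused = n_honest * false_positive_rate
    flagged = caught + falsely_accused
    precision = caught / flagged if flagged else 0.0
    return caught, falsely_accused, precision


# Hypothetical class of 100: 10 cheaters, 90 honest students, and a
# "dart-throwing" detector that flags 20% of essays no matter who wrote them.
caught, accused, precision = expected_flags(10, 90, 0.20, 0.20)
print(f"caught ~{caught:.0f}, falsely accused ~{accused:.0f}, precision {precision:.0%}")
# prints: caught ~2, falsely accused ~18, precision 10%
```

Because most students in the class are honest, even a modest `false_positive_rate` produces more false accusations than real catches unless the detector is far better than chance.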

Yea, I know, which is why I said it may become a harsh battle. I’m not in education, but it really seems like a difficult situation. My broader point about the harsh battle was that if it becomes well known that LLMs are bad for a child’s development, then there’ll be a good amount of anxiety from parents etc.

Kids using an AI system trained on edgelord Reddit posts aren’t doing well on tests?

Ya don’t say.

Like any tool, it depends how you use it. I have been learning a lot of math recently and have been chatting with AI to increase my understanding of the concepts. There are times when the textbook shows some steps that I don’t understand why they’re happening and I’ve questioned AI about it. Sometimes it takes a few tries of asking until you figure out the right question to ask to get the right answer you need, but that process of thinking helps you along the way anyways by crystallizing in your brain what exactly it is that you don’t understand.

I have found it to be a very helpful tool in my educational path. However, I am learning things because I want to understand them, not because I have to pass a test, and that determination to understand makes a big difference. Just getting hints to help you solve the problem might not really help in the long run, but if you’re actually curious about what you’re learning and focus on getting a deeper understanding of why and how something works rather than just getting the right answer, it can be a very useful tool.

Why are you so confident that the things you are learning from AI are correct? Are you just using it to gather other sources to review by hand or are you trying to have conversations with the AI?

We’ve all seen AI get the correct answer but the show your work part is nonsense, or vice versa. How do you verify what AI outputs to you?

You check its work. I used it to calculate efficiency in a factory game and went through and made corrections to inconsistencies I spotted. Always check its work.

Exactly. It’s a helpful tool but it needs to be used responsibly. Writing it off completely is as bad a take as blindly accepting everything it spits out.

I use it for explaining stuff when studying for uni and I do it like this: If I don’t understand e.g. a definition, I ask an LLM to explain it, read the original definition again and see if it makes sense.

This is an informal approach, but if the definition is sufficiently complex, false answers are unlikely to lead to an understanding. Not impossible ofc, so always be wary.

For context: I’m studying computer science, so lots of math and theoretical computer science.

I’m not at all confident in the answers directly. I’ve gotten plenty of wrong answers from AI and I’ve gotten plenty of correct answers. If anything it’s just more practice for critical thinking skills, separating what is true from what isn’t.

When it comes to math though, it’s pretty straightforward, I’m just looking for context on some steps in the problems, maybe reminders of things I learned years ago and have forgotten, that sort of thing. As I said, I’m interested in actually understanding the stuff that I’m learning because I am using it for the things I’m working on so I’m mainly reading through textbooks and using AI as well as other sources online to round out my understanding of the concepts. If I’m getting the right answers and the things I am doing are working, it’s a good indicator I’m on the right path.

It’s not like I’m doing cutting-edge physics or medical research where mistakes could cost lives.

It’s sort of like saying poppy production overall is pretty negative, but if smart, critical people use it sparingly and apprehensively, opiates could be of great benefit to them.

That’s all well and good, but AI is not being developed to help critical thinkers research slightly more easily; it’s being created to reduce the amount of money companies spend on humans.

Until regulations are in place to guide the development of the technology in useful ways, I don’t know that any of it should be permitted. What’s the rush anyway?

Well, I’m definitely not pushing for more AI, and I like to try to stay nuanced on the topic. Like I mentioned in my first comment, I have found it to be a very helpful tool, but used in other ways it could do more harm than good. I’m not involved in making or pushing AI, but as long as it is an available tool I’m going to use it in the most responsible way I can and talk about how I use it. I know I can’t control what other people do, but maybe I can help some people who are only using it to get answer hints, like in the article, find more useful ways of using it.

When it comes to regulation, yeah I’m all for that. It’s a sad reality that regulation always lags behind and generally doesn’t get implemented until there’s some sort of problem that scares the people in power who are mostly too old to understand what’s happening anyways.

And as to what’s the rush, I would say a combination of curiosity and good intentions mixed with the worst of capitalism, the carrot of financial gain for success and the stick of financial ruin for failure and I don’t have a clue what percent of the pie each part makes up. I’m not saying it’s a good situation but it’s the way things go and I don’t think anyone alive could stop it. Once something is out of the bag, there ain’t any putting it back.

Basically I’m with you that it will be used for things that make life worse for people and that sucks, and it would be great if that was not the case but that doesn’t change the fact that I can’t do anything about that and meanwhile it can still be a useful tool and so I’m going to use it the best that I can regardless how others use it because there’s really nothing I can do except keep pushing forward the best I can, just like anyone else.

It might just be a difference in perspective. I agree with your assessment of how things are, but not of how they will be in the future. There are countries that are more responsible in their research, so I know it’s possible. It’s all politics, and I don’t believe in giving up on social change just yet.

I personally use its answers as a jumping-off point to do my own research, or I ask it for sources directly and check those out. I frequently use LLMs for learning about topics, but I definitely don’t take anything they say at face value.

For a personal example, I use ChatGPT as my personal Japanese tutor. I use it to discuss and break down nuances of various words or sayings, names of certain conjugation forms, etc., and it is absolutely not 100% correct, but I can now take the names of things that it gives me in native Japanese, which I never would have known, and look them up using other resources. Either it’s correct and I find confirming information, or it’s wrong and I can research further independently or ask it follow-up questions. It’s certainly not as good as a human native speaker, but for $20 a month, and as someone who enjoys doing their own research, I fucking love it.

Hey, that’s a cool thing to do! I’ll try it. Learning a new language through LLMs sounds cool.

It is! Just be aware that it won’t always be right. It’s good to verify things with additional sources (as with anything, really).

He is cross-checking.

I, like the OP, was also studying math from a textbook and using GPT-4 to help clear things up. GPT-4 caught an error in the textbook.

The LLM doesn’t have a theory of mind; it won’t start over and try to explain a concept from a completely new angle, it mostly just repeats the same stuff over and over. Still, once I have figured something out, I can ask the LLM if my ideas are correct, and it sometimes makes small corrections.

Overall, most of my learning came from the textbook, and talking with the LLM about the concepts I had learned helped cement them in my brain. I didn’t learn a whole lot from the LLM directly, but it was good enough to confirm what I learned from the textbook and sometimes correct mistakes.

If you didn’t have AI, what would you have done instead?

I mean, why are you confident the work in textbooks is correct? Both have been proven unreliable, though I will admit LLMs are much more so.

The way you verify in this instance is actually going through the work yourself after you’ve been shown sources. They are explicitly not saying they take 1+1=3 as law, but instead asking how that was reached and working off that explanation to see if it makes sense and learn more.

Math is likely the best subject for this, too. Math has undeniable truths: a statement is either true or false. There are no (meaningful) opinions on how addition works other than the correct one.

The problem with this style of verification is that there is no authoritative source. Neither the AI nor yourself is capable of verifying for accuracy. The AI also has no expectation of being accurate or revised.

I don’t see how this is any better than running google searches on reddit or other message boards looking for relevant discussions and basing your knowledge on those.

If AI were enabling something new, that might be worth it, but allowing someone to find slightly less/more shitty message board posts 10% more efficiently isn’t worth what’s happening. There are countries that are capable of regulation as a field fills out; why can’t America? We banned TikTok in under a month, didn’t we?

Sometimes it leads me wildly astray when I do that, like a really bad tutor… but it is good if you want a refresher and can spot the bullshit on the side. It is good for spotting things that you didn’t know before and can fact-check afterwards.

…but maybe other review papers and textbooks are still better…


This! Don’t blame the tech, blame the grown ups not able to teach the young how to use tech!

The study is still valuable; this is a math class, not a technology class, so understanding its impact is important.

Yeah, I didn’t read that the prompt-engineered ChatGPT was better than the non-ChatGPT class 😄 but I guess that proves my point as well: if the students in the group with normal ChatGPT had been taught how to prompt it so that it answers in a more teacher-like style, I bet they would have gotten similar results to the students with the prompt-engineered ChatGPT.

Can I blame the tech for using massive amounts of electricity, making e.g. Ireland use more fossil fuels again?

Well, I guess, I mean the evidence is clear, isn’t it?

If you actually read the article you will see that they tested both allowing the students to ask for answers from the LLM, and then limiting the students to just ask for guidance from the LLM. In the first case the students did significantly worse than their peers that didn’t use the LLM. In the second one they performed the same as students who didn’t use it. So, if the results of this study can be replicated, this shows that LLMs are at best useless for learning and most likely harmful. Most students are not going to limit their use of LLMs for guidance.

You AI shills are just ridiculous, you defend this technology without even bothering to read the points under discussion. Or maybe you read an LLM generated summary? Hahahaha. In any case, do better man.

Obviously no one’s going to learn anything if all they do is blatantly ask for answers and written work.

You should try reading the article instead of just the headline.


If you’d read the article, you would have learned that there were three groups: one with no GPT, one with plain GPT access, and another with a GPT that would only give hints and clues toward the answer but wouldn’t give it directly.

That third group tied the first group in test scores. The issue was that ChatGPT is dumb and often gave incorrect instructions on how to solve the problem, or came up with the wrong answer. I’m sure if GPT were capable of not giving the answer away while correctly explaining how to solve each problem, that group would have beaten the no-GPT group easily.

Of all the students in the world, they picked ones from a “Turkish high school”. Is there any clear indication why they chose there, of all places, when the study was conducted by a US university?

I’m guessing there was a previous connection with some of the study authors.

I skimmed the paper, and I didn’t see it mention language. I’d be more interested to know if they were using ChatGPT in English or Turkish, and how that would affect performance, since I assume the model is trained on significantly more English language data than Turkish.

GPTs are designed with translation in mind, so I could see them being extremely useful for giving me instruction on a topic in a native language other than English.

But they haven’t been around long enough for the novelty factor to wear off.

It’s like computers in the 1980s… people played Oregon Trail on them, but they didn’t really help much with general education.

Fast forward to today, and computers are the core of many facets of education, allowing students to learn knowledge and skills that they’d otherwise have no access to.

GPTs will eventually go the same way.

The paper only says it’s a collaboration. It’s pretty large scale, so the opportunity might be rare. There’s a chance that (the same or other) researchers will follow up and experiment in more schools.

The names of the authors suggest there could be a cultural link somewhere.

Ah thanks, that does appear to be the case.

If I’d had access to ChatGPT during my college years, and it had helped me parse things I didn’t fully understand from the texts or provided much-needed context for what I was studying, I would’ve done much better because I’d have integrated my learning sooner. That’s one of the areas where ChatGPT shines. I only got there on my way out. But math problems? Ugh.

When you automate these processes, you lose the experience. If you’d had access to ChatGPT back then, I wouldn’t be surprised if you couldn’t parse information as well as you can now.

It’s hard to get better at solving your problems if something else solves them for you.

Also, the reliability of these systems is poor, and they’re specifically trained to produce output that appears correct, not output that actually is correct.

I read that comment as describing something closer to a super-dictionary/encyclopedia, and I use it similarly, the same way I’d watch supplementary YouTube videos to enhance my understanding, rather than to automate the understanding process.

More like having a tutor who you ask all the too-stupid and too-hard questions to, who never gets tired or fed up with you.

Exactly this! That is why I always have at least one AI chatbot instance running when I am coding, or rather, analysing code for debugging.

It makes it possible to debug kernel stuff without much prior knowledge, if you are good at phrasing your questions as prompts. Well, it did work for me.

I quickly learned how ChatGPT works, so I’m aware of its limitations. And since I’m talking about university students, I’m fairly sure those smart cookies can figure it out themselves. The thing is, studying the biological sciences requires you to understand other subjects you haven’t learned yet, and having someone explain how that fits into the overall picture puts you way ahead of the curve because you start integrating knowledge earlier. Otherwise you only get that in retrospect, once you’ve passed all your classes and have a panoramic view of the field, which, in my opinion, is too late for excellent grades. This is why I think having parents with degrees in a related field or personal tutors gives anyone in college an incredibly unfair advantage. That’s what ChatGPT gives you for free. Your parents and tutors will also make mistakes, but that doesn’t take away their value, and the same is true for the AIs.

And regarding output that only appears correct, some tools help mitigate that. I’ve used the Consensus plugin to some degree and think it’s fairly accurate for resolving some questions based on research. What’s more useful is that it cites the paper directly, so you can learn more instead of relying on ChatGPT alone. It’s a great tool that I wish I’d had; it would’ve saved me so much time to focus on other, more important things instead of going down lists of fruitless search results with a million tabs open.

One thing I will agree with you on: what we’d probably lose is learning how to use Google Scholar, Google Books, and ~~pirating books~~ using the library to find the exact information as it appears in the textbooks to answer homework questions, which I did meticulously, down to the paragraph. But only I did that. Everybody else copied their homework, so at least at my university it was a personal choice how far you wanted to take those skills. So now, instead of your peers giving you the answers, it’s ChatGPT. So my question is, are we really losing anything?

Overall, I think other skills need honing today, particularly verifying information, together with critical thinking, which is always relevant. And the former is only hard because it’s tedious work, honestly.

The study was done in Turkey, probably because students are for sale and have no rights.

It doesn’t matter though. They could pick any weird, tiny sample and do another meaningless study. It would still get hyped and they would still get funding.

TL;DR: ChatGPT is terrible at math, and most students just ask it for the answer. Letting students ask something that doesn’t know math for the answer makes them less capable. An enhanced chatbot that was pre-fed the questions and correct answers didn’t screw up the learning process in the same fashion, but it also didn’t help them perform any better on the test, because, again, they just asked it to spoon-feed them the answer.

references

ChatGPT’s errors also may have been a contributing factor. The chatbot only answered the math problems correctly half of the time. Its arithmetic computations were wrong 8 percent of the time, but the bigger problem was that its step-by-step approach for how to solve a problem was wrong 42 percent of the time.

The tutoring version of ChatGPT was directly fed the correct solutions and these errors were minimized.

The researchers believe the problem is that students are using the chatbot as a “crutch.” When they analyzed the questions that students typed into ChatGPT, students often simply asked for the answer.

Haven’t seen that in ages. Thanks.

No worries. Somehow I use it quite often nowadays.

God I miss ytmnd https://owleyes.ytmnd.com/


In the study they said they used a modified version that acted as a tutor, that refused to give direct answers and gave hints to the solution instead.

So it’s still not surprising since ChatGPT doesn’t give you factual information. It just gives you what it statistically thinks you want to read.

That’s like cheating with extra steps.

Ain’t getting hints on your in class exam.

You could always try reading the article

Which, in a fun bit of meta, is a decent description of artificial “intelligence” too.

Maybe the real ChatGPT was the children we tested along the way

I’m not entirely sold on the argument I lay out here, but this is where I would start were I to defend using ChatGPT in school the way they did in their experiment.

It’s a tool. Just like a calculator. If a kid learns and does all their homework with a calculator, then suddenly it’s taken away for a test, of course they will do poorly. Contrary to what we were warned about as kids though, each of us does carry a calculator around in our pocket at nearly all times.

We’re not far off from having an AI assistant with us 24/7. Why not teach kids to use the tools they will have in their pockets for the rest of their lives?

I think you also need to teach your kid not to trust this tool unconditionally and to question the quality of its output, as well as teaching them how to write better prompts. It’s the same as with Google: if you put in shitty queries, you will get subpar results.

And believe me, I have seen plenty of tech people write the lamest prompts.

I remember teachers telling us not to trust the calculators. What if we hit the wrong key? Lol

Some things never change.

I remember the teachers telling us not to trust Wikipedia, but they had utmost faith in the shitty old books that were probably never verified by another human before being published.

I mean, usually Wikipedia’s references ARE from those old books.

Eh I find they’re usually from a more direct source. The schoolbooks are just information sourced from who knows where else.

I don’t know about your textbooks and what ages you’re referring to but I remember many of my technical textbooks had citations in the back.

Yep, students these days have no idea about the back of their books and how useful the index can be and the citations after that.

Even after repeatedly pointing it out, they still don’t make use of it. Despite the index being nearly a cheat code in itself.

Human error =/= machine unreliability

You’re right. The commenter who made the comparison to Wikipedia made a better point.

As adults we are dubious of the results that AI gives us. We take the answers with a handful of salt and I feel like over the years we have built up a skillset for using search engines for answers and sifting through the results. Kids haven’t got years of experience of this and so they may take what is said to be true and not question the results.

As you say, the kids should be taught to use the tool properly, and verify the answers. AI is going to be forced onto us whether we like it or not, people should be empowered to use it and not accept what it puts out as gospel.

This is true for the whole internet, not only AI chatbots. Kids need to be taught that there is BS around. In fact, kids had to learn that even before the internet. Every human has to learn that you cannot blindly trust anything and that one has to think critically. This is nothing new. AI chatbots just show how flawed human education is these days.

It’s a tool. Just like a calculator.

lol my calculator never “hallucinated”.

Yeah it’s like if you had a calculator and 10% of the time it gave you the wrong answer. Would that be a good tool for learning? We should be careful when using these tools and understand their limitations. Gen AI may give you an answer that happens to be correct some of the time (maybe even most of the time!) but they do not have the ability to actually reason. This is why they give back answers that we understand intuitively are incorrect (like putting glue on pizza), but sometimes the mistakes can be less intuitive or subtle which is worse in my opinion.

Ask your calculator what 1-(1-1e-99) is and see if it still never hallucinates (confidently gives an incorrect answer).
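For anyone curious what happens there, here is a small sketch, assuming the "calculator" works in IEEE 754 double precision the way Python floats do (physical calculators often use decimal formats with different precision, so their exact output will vary). The inner subtraction rounds 1 - 1e-99 to exactly 1.0, so the tiny term is gone before the outer subtraction runs; exact or higher-precision arithmetic keeps it.

```python
from decimal import Decimal, localcontext
from fractions import Fraction

# Python float (IEEE 754 double): the gap between 1.0 and the next
# representable value is about 2.2e-16, so 1 - 1e-99 rounds to exactly 1.0
# and the 1e-99 is lost before the outer subtraction happens.
float_result = 1 - (1 - 1e-99)
print(float_result)  # 0.0

# Exact rational arithmetic keeps every digit, so the true answer survives.
exact_result = 1 - (1 - Fraction(1, 10**99))
print(exact_result == Fraction(1, 10**99))  # True

# Decimal arithmetic with enough precision (120 significant digits is an
# arbitrary choice, comfortably more than the 99 digits needed here).
with localcontext() as ctx:
    ctx.prec = 120
    decimal_result = Decimal(1) - (Decimal(1) - Decimal("1e-99"))
print(decimal_result)  # 1E-99
```

The float version returns 0.0 instead of 1e-99 purely because of rounding in the intermediate step; `Fraction` and `Decimal` avoid it but are slower than native floats.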